Mitigate the Negative Transfer Learning Using Adaptive Thresholding for Fault Diagnosis

Image credit: Unsplash

Abstract

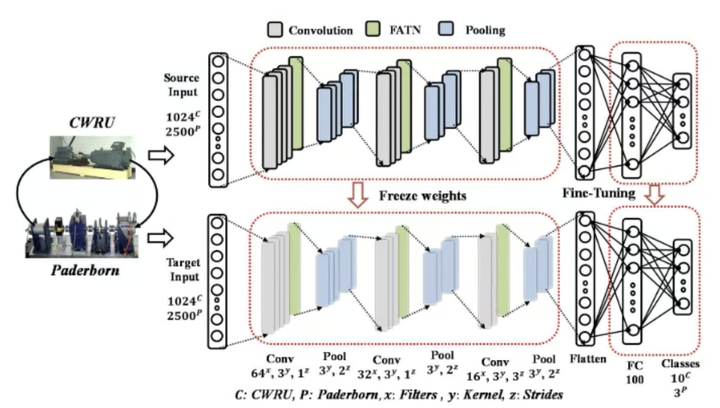

The fourth industrial revolution has created a data-centric ecosystem in which Prognostics and Health Management (PHM) technology is crucial for supporting contemporary industrial systems. To improve fault diagnosis and health assessment of mechanical equipment, Deep Learning (DL) has been integrated into PHM. However, DL models face several challenges in PHM, such as the need for large amounts of labeled data and limited generalizability. Transfer Learning (TL) has emerged as a promising technique for overcoming these limitations. Fine-tuning, a commonly used approach to the inductive transfer of deep models, assumes that the source and target tasks are related and that the parameters pre-trained on the source task are likely to be close to the optimal parameters for the target task. Nevertheless, when training data in the target domain are limited, fine-tuning can lead to negative transfer and catastrophic forgetting. To overcome these issues, we propose a novel regularization approach that, during fine-tuning, selectively modulates the features of normalized inputs based on their distance from the mini-batch mean. Our approach aims to prevent the negative transfer of pre-trained knowledge that is irrelevant to the target task and to mitigate catastrophic forgetting. Compared with other state-of-the-art regularization-based methods, our approach increases accuracy by 0.9% to 5% under the same environmental conditions and by 2.8% to 6.2% under different environmental conditions.
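
The abstract does not specify how the threshold is chosen or how features are modulated, so the following is only a rough illustration of the general idea, not the authors' implementation. This NumPy sketch batch-normalizes a mini-batch, measures each entry's distance from the mini-batch mean in standard-deviation units, derives a per-feature threshold from that batch's own distances (the q-th quantile, an assumption), and scales entries beyond the threshold by a fixed factor (also an assumption). The function `threshold_modulate` and its parameters `q` and `attenuation` are hypothetical names introduced here.

```python
import numpy as np


def threshold_modulate(x, q=0.9, attenuation=0.5, eps=1e-5):
    """Hypothetical sketch (not the paper's method): batch-normalize x, then
    attenuate entries that lie far from the mini-batch mean.

    x: array of shape (batch, features).
    q: quantile of per-feature distances used as the adaptive threshold (assumption).
    attenuation: multiplier applied to entries beyond the threshold (assumption).
    Returns the modulated normalized batch and the per-feature thresholds.
    """
    x = np.asarray(x, dtype=float)

    # Per-feature mini-batch statistics, as in batch normalization.
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)

    # Distance of each entry from the mini-batch mean, in standard-deviation units.
    dist = np.abs(x_hat)

    # Adaptive per-feature threshold recomputed from this batch's distances.
    tau = np.quantile(dist, q, axis=0)

    # Keep entries within the threshold; scale down entries beyond it.
    scale = np.where(dist > tau, attenuation, 1.0)
    return x_hat * scale, tau


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    batch = rng.normal(size=(32, 4))  # 32 samples, 4 features
    out, tau = threshold_modulate(batch, q=0.9, attenuation=0.5)
    print(out.shape, tau.shape)  # (32, 4) (4,)
```

Because the threshold is recomputed from every mini-batch, it tracks the spread of the current batch instead of acting as a fixed cutoff. The abstract does not state whether the original approach attenuates outlying or central entries, or whether a learnable scale and shift follow the modulation, so both choices here are placeholders.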
